More or less by coincidence, I stumbled upon the topic of this post a couple of weeks ago, during my latest attempt to raise the success rate of the MS test suite on our solution. It is a task I pick up every once in a while, next to all the normal work.

So far, my main approach to getting as many MS tests working as possible has been a statistical one: find the most frequent error and solve it, then take the next most frequent one, and so on. In one week the success rate rose from 23% to 72%. One more week got us to almost 80%, i.e. 79%. After six weeks altogether, spread over a couple of months, we hit 90%. As in many other cases, the Pareto principle applied here too, so getting the remaining 10% to succeed will take almost as much time again. You could wonder whether that’s worth the trouble. Well, this blog post could be seen as proof that it is: it has been worthwhile to keep raising the success rate. In fact, I deliberately continue for the very reason we started this project: to learn from the automated tests MS conceived. And in the meantime we do get more tests working.

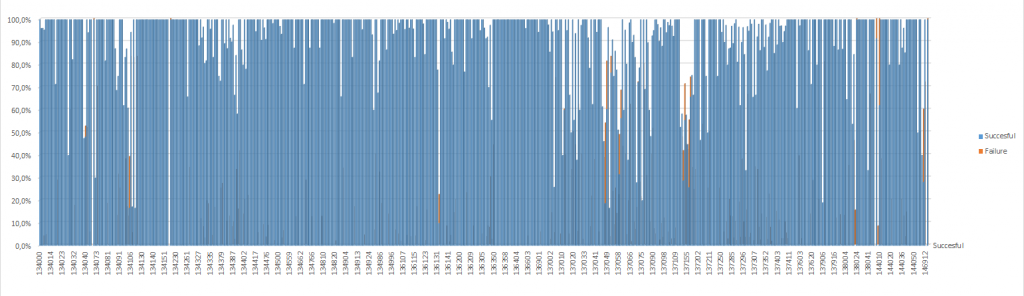

So how did I “stumble upon” it? Instead of the statistical approach described above (“solve the most frequent error”), I looked at the left side of my percentage graph. That’s where you find the lower-numbered test codeunits, which hold the tests that are most meaningful to us; those on the right mostly test production and warehouse management, which are currently of little or no use to us.

I focused on the white ditches between the blue: the deeper the ditch, the lower the success rate. And maybe the easier to get it up.

The one that caught my eye most was the one that was white all the way down. The narrow Grand Canyon. Or the Gorges du Tarn, being a Francophile. Do you see it? Somewhat left of the middle. 0% success in this test codeunit. It turned out to be COD134926 (Table Relation Test). To be honest, I didn’t look at the name at first; I opened it in design mode and found there was only one test function. Was I going to spend my time getting this fixed? So I looked at the codeunit name, looked at the error thrown, and studied the code more closely.

Table Relation Test

The type of Table 23 in Field 50012 is incompatible with Table 50026 Field 1.

`[Test] ValidateFieldRelationCompatibility()`

`LOCAL ValidateFieldRelation(VAR TempTableRelationsMetadata : TEMPORARY Record "Table Relations Metadata") : Boolean`

Aha, it’s checking the technical validity of a table relation, i.e. whether the source and target fields that make up the relation are compatible:

- do the data types match

- does the length of the fields match

Or, as the in-code explanation says:

// <Summary> Fields must have the exact length of the largest field they relate to.

// <Summary> Fields must have the same type as the fields they relate to. Exception: if it relates to both Code and Text it must be Text
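To make the two rules concrete, here is a minimal Python model of them. It is a sketch under assumptions, not the actual C/AL logic of COD134926: `FieldInfo`, `required_type` and `relation_violations` are hypothetical names, a field is reduced to just a data type and a length, and a relation is assumed to point at one or more target fields (hence “the largest field they relate to”).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class FieldInfo:
    """Hypothetical stand-in for one field's metadata; not a NAV type."""
    data_type: str  # e.g. "Code", "Text", "Integer"
    length: int


def required_type(targets: List[FieldInfo]) -> Optional[str]:
    """Type the source field must have, or None if no single type is valid."""
    types = {t.data_type for t in targets}
    if types == {"Code", "Text"}:
        # Exception from the summary: relating to both Code and Text means Text.
        return "Text"
    if len(types) == 1:
        return next(iter(types))
    return None  # e.g. Code and Integer targets: no compatible type exists


def relation_violations(source: FieldInfo, targets: List[FieldInfo]) -> List[str]:
    """Return which of the two rules ("type", "length") a relation breaks."""
    if not targets:
        raise ValueError("a table relation needs at least one target field")
    violations = []
    if source.data_type != required_type(targets):
        violations.append("type")
    # Rule from the summary: exact length of the largest related field.
    if source.length != max(t.length for t in targets):
        violations.append("length")
    return violations


if __name__ == "__main__":
    # Hypothetical fields, for illustration only.
    target = FieldInfo("Code", 20)
    print(relation_violations(FieldInfo("Code", 20), [target]))  # []
    print(relation_violations(FieldInfo("Code", 10), [target]))  # ['length']
    mixed = [FieldInfo("Code", 20), FieldInfo("Text", 50)]
    print(relation_violations(FieldInfo("Code", 50), mixed))  # ['type']
```

In the real test the relations come from the `Table Relations Metadata` record shown in the function signature above; in this sketch they are simply passed in by hand.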

Wow. We have been cleaning up our code a lot in the last year but hadn’t been able to tackle this, and now we hit upon this nice gift. It pointed out (ouch, do I dare to say this) … more than 50 incompatible table relations. Needless to say, these were injected into our code long, long ago.

If, after all my previous posts on the test toolkit, you’re still looking for reasons to start using it, now you surely have one (more): a small but very useful one.