Together with Global Mediator we are starting a series of blogposts about QA for the Dynamics channel. The idea was born when both I and my Global Mediator friends came to the same conclusion: test automation is still not really taking off in the Dynamics NAV/BC world, let alone quality assurance. So we sat down and started a joint project which we baptised: QA, the ugly duckling. Through this we want to sharpen our minds and at the same time make you, our readers, part of it. Let’s see how this first stone gets more of them rolling. Feel free to challenge our statements and help us all grow to the next professional level.

Both Global Mediator and I will be posting our writings. Find theirs here: http://global-mediator.com/blog/

In the old days, the roles in an ERP implementation were straightforward. Functional experts worked with users to draw up functional requirements. Developers would turn those into code. The result would be handed back to the users or functional experts for testing. This may not have been super cost-efficient, but it worked well while the update cycle was infrequent. With the move to more cloud-based solutions, the update cycle is becoming much more frequent. This dramatically increases the time and cost spent on testing, especially if a more substantial regression test is required. As a consequence, the old working model is ripe for improvement.

Testing challenges

In order to drive down the cost of frequent testing, some form of test automation seems in order. But this is easier said than done. The first challenge is that even the best test automation tools require some form of technical tweaking, which is not something many users can do. The second challenge is that most developers still seem to think of test automation as something that is done by others. And even when they do pick up the challenge, the test code units often only cover the specific application they work on, not all the processes that run from and to different best-in-class online applications. The final challenge is that any form of test automation needs to be well structured and maintained over time.

A change in testing strategy may require an organizational rethink

So, in order for testing not to drive up the cost by sitting between groups of people that do not embrace the opportunity, a paradigm shift is needed. More specifically, we need to move from a pure testing mindset to a more quality assurance-based mindset. A mindset which considers the entire process and not just the end result. Herein lies the challenge. Note that in order to do that we need to not only introduce new methods, but we most likely need to redefine who is in charge of quality. This will change the way we have worked for decades.

“We need to move from a pure testing mindset to a more quality assurance-based mindset. A mindset which considers the entire process and not just the end result”.

– Nicolai Krarup, COO at Global Mediator

In a series of blogposts, we would like to share with you our thoughts on how to do that, interspersed with meaningful examples. Today we start upstream by discussing why you would want to test your requirements.

Testing the requirements – or how to reduce the cost of fixing bugs

One of the primary disciplines in a software development process is the requirements specification. This should end up being a description of what the users want a certain piece of software to do, whether it is newly developed or an enhancement. Any flaws in this specification will result in software that does not meet the user’s initial needs. Or, paraphrasing Steve McConnell in his much acclaimed book Code Complete: If the user has asked for a Rolls Royce and the requirements specify a Pontiac Aztek, the result will clearly not meet the user’s expectations.

It is stating the obvious that the success of a software development process depends heavily on the quality of the requirements specification. Nevertheless, many Dynamics NAV/BC implementation projects seem to suffer from a large number of issues after go-live. A major part of these issues can apparently be traced back to flaws in the requirements, either omissions or outright errors.

The relative cost of fixing bugs

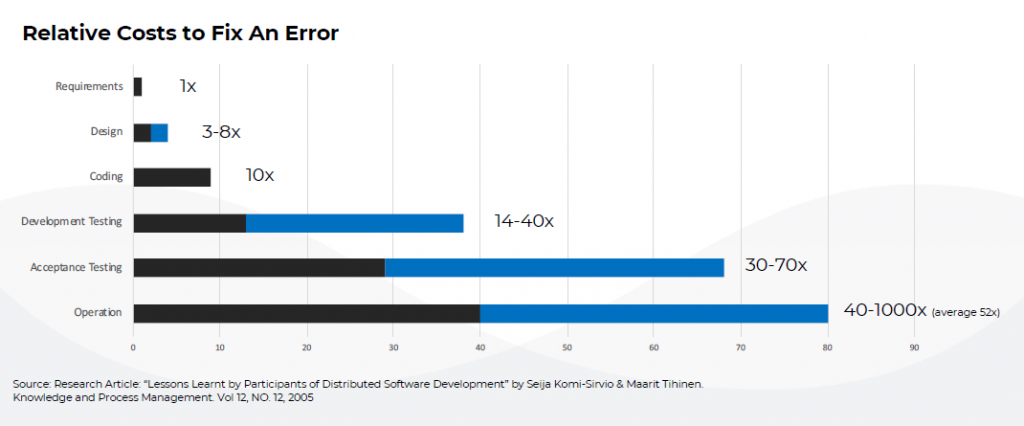

An upstream defect has a downstream effect, and that effect comes with a cost to get it fixed. Fixing issues after go-live is substantially more expensive than catching the requirement flaws that cause them during the requirements specification.

In the aforementioned Code Complete, Steve McConnell put the relative cost of fixing a requirements defect after go-live at 25 to 100. In other words: fixing a defect that is found after go-live costs 25 to 100 times more than fixing it when it is found in the requirements specification.

“The cost of finding the defect after go-live is 25 to 100 times more costly than when finding it in the requirements specification”.

– Luc van Vugt, D365 BC MVP & QA-evangelist

Internal Microsoft studies around the same time talked about a relative cost of up to 1000. It is worth mentioning that this figure accounted not only for the straightforward defect fixing but also for things like reputational damage. Both findings seemed to be confirmed by the research article by Seija Komi-Sirvio & Maarit Tihinen in June 2005 (“Lessons Learnt by Participants of Distributed Software Development”, Knowledge and Process Management, Vol. 12, No. 12).
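To make these multipliers tangible, here is a minimal sketch of the arithmetic. The 25, 100 and 1000 multipliers come from the text above; the two-hour baseline and the function name are purely illustrative assumptions, not figures from any of the cited sources.

```python
def post_go_live_cost(cost_at_requirements: float, multiplier: float) -> float:
    """Scale the cost of fixing a defect during requirements by a relative-cost multiplier."""
    return cost_at_requirements * multiplier


# Assumed baseline: fixing the flaw during requirements review takes 2 hours.
baseline_hours = 2.0

# Multipliers quoted in the text: 25 to 100, and up to 1000.
for multiplier in (25, 100, 1000):
    hours = post_go_live_cost(baseline_hours, multiplier)
    print(f"x{multiplier}: {hours:.0f} hours after go-live")
```

Under that assumption, a flaw that takes two hours to correct in the specification would take somewhere between 50 and 200 hours to deal with after go-live, and up to 2000 hours when the wider multiplier applies.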

Driving quality upstream

Given the above, driving quality upstream becomes a very cost-effective exercise. But how do you do that? How do you minimize the number of defects in your requirements?

By testing the requirements! By formalizing the way you review requirements based upon best practices. Or in a more actionable form: by setting quality bars against which you test the requirements.

Setting quality bars to test requirements against

One way of setting quality bars is to use clearly defined criteria to verify the requirements against. Many people have done this before you and me, and have shared their best practices. Using these will undoubtedly help you test your requirements. As per ISO/IEC/IEEE 29148:2018 and IEEE 830-1998 you would typically test against the following ten criteria:

- Correctness

- Completeness

- Clearness

- Unambiguity

- Verifiability

- Necessity

- Feasibility

- Scalability

- Consistency

- Prioritization

In our next posts we will elaborate on, and illustrate, each of these criteria.
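As a first impression of what testing against these quality bars could look like in practice, here is a minimal sketch of a review checklist. It is not part of either standard: the class, the method names and the sample findings are hypothetical, and the only thing taken from the text is the list of ten criteria.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The ten criteria listed above.
CRITERIA = (
    "Correctness", "Completeness", "Clearness", "Unambiguity", "Verifiability",
    "Necessity", "Feasibility", "Scalability", "Consistency", "Prioritization",
)


@dataclass
class RequirementReview:
    """Hypothetical record of review findings for a single requirement."""
    requirement_id: str
    findings: dict[str, list[str]] = field(default_factory=dict)

    def record(self, criterion: str, reviewer: str, note: str) -> None:
        """Log a finding against one of the ten criteria."""
        if criterion not in CRITERIA:
            raise ValueError(f"Unknown criterion: {criterion}")
        self.findings.setdefault(criterion, []).append(f"{reviewer}: {note}")

    def failed_criteria(self) -> list[str]:
        """Criteria with at least one finding, in checklist order."""
        return [c for c in CRITERIA if c in self.findings]

    def passes(self) -> bool:
        """A requirement meets the quality bar when no findings were recorded."""
        return not self.findings


# Illustrative usage with made-up findings.
review = RequirementReview("REQ-001")
review.record("Unambiguity", "developer", "'fast posting' is not quantified")
review.record("Verifiability", "tester", "no acceptance criterion given")
print(review.failed_criteria())
```

Recording who raised each finding keeps the review traceable to the different roles taking part in it.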

Multidisciplinary involvement

To get the richest possible feedback, whatever methodology you use, make sure your reviews are a multidisciplinary effort. Include the different roles in the development project. As Karl Wiegers puts it: Requirements quality is in the eye of the reader, not the author.

Apart from improving the requirements themselves, this also creates a higher degree of involvement among the disciplines concerned, i.e. the people involved.

Hope you will bear with us for our next posts.